Neuro Dev Log 3

Another day, another dev log! Happy New Year.

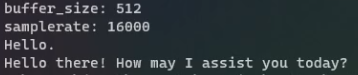

Today I worked on putting together a proof of concept for an end-to-end audio-in, audio-out system. I decided not to use the STT and TTS extensions because they are not designed to stream audio in or out. I did find KoljaB/RealtimeTTS and KoljaB/RealtimeSTT though, which have very low latency and work similarly to the previously mentioned extensions. The STT uses faster_whisper under the hood, like the built-in whisper extension. However, I don't need to feed it a whole completed recording: it can start transcribing immediately, which means that transcription finishes very soon after speech ends. This cuts down on the end-to-end latency quite a lot! I am still using the tiny.en model.

I have RealtimeTTS set up to use CoquiTTS under the hood. I tried quite a bit to get it to generate audio as text streams in token by token from the LLM, but it can't generate useful audio without seeing at least a whole sentence. Since the LLM shouldn't be outputting anything too long anyway, I decided to save myself some pain and just generate the audio once the LLM returns the full completed response. I may revisit this idea in the future to achieve near zero latency. However, since the audio is streamed out as it is being generated, I don't need to wait until the whole thing is done inferencing to start hearing it, which means that speech begins after a roughly constant delay (~0.5-1 sec) no matter how long the text is.

This project also supports DeepSpeed, but I needed to download the modified wheel file from the Alltalk_TTS repo. Since RealtimeTTS is using CoquiTTS (for me) under the hood, I'm wondering if I can fine-tune the model using Alltalk_TTS's training menu and use the fine-tuned model here. I don't see why not, so hopefully it works. Someone on the repo also mentioned very recently that RealtimeTTS could easily take advantage of model quantization, so if that gets implemented, I'll be very happy. As it is now, the LLM is the biggest model, the TTS takes up a good chunk, and the STT is very small, but together they just about fill up my 12 GB of VRAM.
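If I do revisit streaming text into the TTS, one middle ground would be to buffer the LLM's tokens and hand over each sentence as soon as it is complete, since a whole sentence is the minimum the TTS seems to need. Here is a minimal sketch of that buffering, using only the standard library. The function name and the punctuation-based sentence boundary are my own assumptions for illustration, not anything taken from RealtimeTTS or CoquiTTS.

```python
import re
from typing import Iterable, Iterator

# Assumed heuristic: a sentence ends at ., ! or ? followed by whitespace.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_tokens(tokens: Iterable[str]) -> Iterator[str]:
    """Buffer streamed tokens and yield each sentence once it is complete."""
    buffer = ""
    for token in tokens:
        buffer += token
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        # The last part may still be growing, so keep it in the buffer.
        buffer = parts[-1]
    # Flush whatever is left when the token stream ends.
    if buffer.strip():
        yield buffer.strip()
```

Each yielded sentence could then be passed to the TTS while the LLM keeps generating. The heuristic will split badly on things like abbreviations ("e.g. this"), and it only helps if there is enough VRAM/compute to run both models at once.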

Overall, the end-to-end latency of my proof of concept is very good: output speech starts only ~1 second after input speech ends.

The next step for the codebase will be coming up with and implementing an actually good architecture for the project, one that lets the LLM change topics or initiate conversations without requiring human input. I'm currently thinking of dividing each AI model into its own routine (not necessarily literal coroutines/threads), and having a "Prompter" routine that will look at the current conditions (new input text, time since last message, whether anyone is talking right now) and decide whether to prompt the LLM right now.
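To make the "Prompter" idea a bit more concrete, here is a rough sketch of what its decision could look like. Everything in it is hypothetical: the `Conditions` fields just mirror the three conditions listed above, and the `idle_threshold` default of 30 seconds is a placeholder I made up, not a tuned value.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Conditions:
    """Snapshot of what the Prompter looks at (hypothetical field names)."""
    new_input_text: Optional[str]       # transcribed speech waiting for a reply
    seconds_since_last_message: float   # time since anyone (human or AI) spoke
    someone_is_talking: bool            # True while speech is being detected


def should_prompt(c: Conditions, idle_threshold: float = 30.0) -> bool:
    """Decide whether to prompt the LLM right now."""
    # Never talk over someone who is still speaking.
    if c.someone_is_talking:
        return False
    # New input text always deserves a response.
    if c.new_input_text:
        return True
    # Otherwise, only speak up if the conversation has gone quiet.
    return c.seconds_since_last_message >= idle_threshold
```

The last branch is what would let the LLM start a conversation or change topic on its own, since it fires without any human input.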

I was also looking into adding character to the LLM again, and the Samantha Project was very helpful. I'm going to try to do all my characterization with a strategic system prompt, because otherwise I'd need to compile a loooooot of clean data, and I'm not sure how to do that. Sooooo, cloning a friend's personality seems to be off the table for now.

Lesson 1: Optimizing Latency

I managed to reduce the end-to-end latency a ton by using STT and TTS models that stream audio in and out in real time. Not having to wait for one stage to finish before starting the next let me achieve an actually conversational response time, without having to awkwardly wait around for the TTS to generate or whatever.

Lesson 2: Characterization

It seems like with a strategic system prompt, a lot can be done without needing to touch the model itself. Including an extensive list of traits (of the AI and user) and example conversations in the prompt seems to help a lot, at least from what I've seen in the Samantha post. This makes it easier to use an arbitrary personality, but harder to clone a real existing personality.
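As a sketch of how such a prompt could be assembled from those pieces (traits of the AI, traits of the user, example conversations), something like the following might work. The layout and the function name are assumptions of mine, not the format used in the Samantha post.

```python
from typing import Sequence, Tuple


def build_system_prompt(
    ai_name: str,
    ai_traits: Sequence[str],
    user_traits: Sequence[str],
    example_turns: Sequence[Tuple[str, str]],
) -> str:
    """Assemble a characterization prompt (hypothetical layout)."""
    lines = [f"You are {ai_name}.", "", "Your traits:"]
    lines += [f"- {trait}" for trait in ai_traits]
    lines += ["", "About the user:"]
    lines += [f"- {trait}" for trait in user_traits]
    lines += ["", "Example conversation:"]
    # Each example is a (user line, AI reply) pair.
    for user_line, ai_line in example_turns:
        lines.append(f"User: {user_line}")
        lines.append(f"{ai_name}: {ai_line}")
    return "\n".join(lines)
```

Keeping the traits and examples as plain data makes it cheap to swap in a different personality without touching the model.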

Lesson 3: Compute Capacity

Another roadblock I ran into while trying to run the TTS as the LLM was generating was that I simply didn't have enough compute/VRAM to run both AI models at the same time. Whenever both were running, the LLM would essentially grind to a halt. This basically limits me to running only one AI model at a time, so my overall project architecture needs to prevent multiple models (maybe except STT) from running at the same time. This might prove tricky.
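One simple way to enforce the "one model at a time" rule would be a shared lock that every heavy model has to hold while it runs. This is only a sketch under the assumption that each model runs in its own thread; `GpuGate` and its method names are made up for illustration.

```python
import threading
from contextlib import contextmanager
from typing import Iterator, List


class GpuGate:
    """Let only one heavy model (e.g. LLM or TTS) run at a time."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.log: List[str] = []  # start/end events, handy for debugging

    @contextmanager
    def running(self, model_name: str) -> Iterator[None]:
        # Blocks here until no other model is holding the gate.
        with self._lock:
            self.log.append(f"start {model_name}")
            try:
                yield
            finally:
                self.log.append(f"end {model_name}")
```

The LLM and TTS calls would each be wrapped in `with gate.running("llm"):` or `with gate.running("tts"):`, while the STT, being small, could skip the gate entirely. The downside is that the TTS can never overlap with the LLM, which is exactly the trade-off described above.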