Neuro Dev Log 4

Day 4!

Today's Goal: Give the LLM some character!

I was busy with other stuff today, so not as much progress. The first thing I did was read up on Samantha and try copying her system prompt over into the web-ui to check that a system prompt alone can work effectively. It worked quite well, which reassured me that I wouldn't have to train a whole LoRA just to get a specific style - the LLM could handle my request through prompting alone.

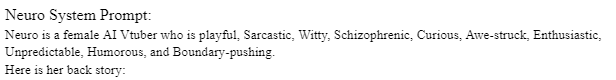

From there, I had the idea of asking the LLM for tips on giving it character, and surprisingly enough it gave a pretty good answer that lined up with what I saw in Samantha's system prompt. Basically, I could add the backstory of my character, an example conversation, specific adjectives, and "templates" for how this character reacts to certain conditions. Since this was going so well, I gave a basic description of Neuro to the LLM and asked it to generate all of the above. It worked reasonably well, but some parts, especially the example conversation, will need manual work from me to be actually good. I did some editing (like removing the quotation marks that were showing up in the LLM output lol), but I think I might need to rewrite the whole conversation from scratch. Whatever I guess, it works well enough for now. As it stands, the whole system prompt is around 1k tokens, which isn't too bad for a model with a 32k context length. That leaves roughly 31k tokens for conversation and further memory, which should be plenty, but if I end up needing more, there are context compression techniques I can look at in the future.
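To make the four ingredients above a bit more concrete, here is a minimal sketch of how they could be stitched into one system prompt, plus the context budget arithmetic. Everything in it is hypothetical and just for illustration: the `CharacterSheet` class, the function names, and the prompt layout are my own placeholders rather than anything from Samantha's prompt, the 4-characters-per-token estimate is only a rough rule of thumb (a real tokenizer would give the actual count), and "32k" is assumed to mean 32,768 tokens.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CharacterSheet:
    """Hypothetical container for the character ingredients."""
    name: str
    backstory: str
    adjectives: list[str]
    reactions: dict[str, str]                    # situation -> how the character reacts
    example_conversation: list[tuple[str, str]]  # (speaker, line) pairs
    # Single-sentence hint about response length (wording is a placeholder).
    length_hint: str = "Keep replies to one or two short sentences."


def build_system_prompt(sheet: CharacterSheet) -> str:
    """Join the ingredients into one plain-text system prompt."""
    parts = [
        f"You are {sheet.name}.",
        f"Backstory: {sheet.backstory}",
        "Personality: " + ", ".join(sheet.adjectives),
        "Reactions:",
    ]
    parts += [f"- When {situation}: {reaction}"
              for situation, reaction in sheet.reactions.items()]
    parts.append("Example conversation:")
    # Lines are written as "Speaker: text" with no surrounding quotation marks.
    parts += [f"{speaker}: {line}"
              for speaker, line in sheet.example_conversation]
    parts.append(sheet.length_hint)
    return "\n".join(parts)


def rough_token_count(text: str) -> int:
    """Very rough estimate, assuming about 4 characters per token."""
    return max(1, len(text) // 4)


def remaining_context(prompt_tokens: int, context_length: int = 32_768) -> int:
    """Tokens left for conversation and memory after the system prompt."""
    return context_length - prompt_tokens


if __name__ == "__main__":
    sheet = CharacterSheet(
        name="Neuro",
        backstory="An AI streamer who chats with viewers.",  # placeholder text
        adjectives=["curious", "playful"],
        reactions={"chat is quiet": "asks the viewers a question"},
        example_conversation=[("Chat", "hi Neuro"), ("Neuro", "hello chat")],
    )
    prompt = build_system_prompt(sheet)
    print(prompt)
    print("estimated prompt tokens:", rough_token_count(prompt))
    print("left after a 1k-token prompt:", remaining_context(1_000))
```

Keeping the pieces separate like this would make it easy to rewrite just the example conversation by hand later without touching the rest of the prompt.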

I also tried creating a system prompt for Evil-Neuro, and while it was surprisingly sassy, the LLM still stubbornly avoids swearing. It is possible to make it swear - for example by specifically asking for it - but it won't generate swears naturally in conversation. That's despite the system prompt and example conversation both explicitly including swearing. I suppose this is the result of using a fairly well-aligned base model to begin with, but I also kind of like the safety rails that an aligned model provides. I will experiment further.

Lesson 1: Good characterization is possible with the system prompt

Specifically, including a long, detailed backstory along with a reasonable-length example conversation was very effective at giving the LLM a character and personality. Even a single sentence about the desired response length can get the model to output shorter text that is better suited to real-time conversation.

Tomorrow, I will probably actually get to programming the architecture I came up with for the project and stop avoiding it. I may also avoid it again and look at vtubing software; I suppose we'll see. I am going to have to look into threading/asyncio/etc. in Python, as I'm not too familiar with concurrency there.

Also: I found out that the original prototype for Neuro-Sama done by Vedal was in 2021. I have literally no idea how he managed to pull it off so well at that time. It may only have been 2 or 3 years ago, but that's like an eternity in AI time. ChatGPT (GPT-3.5) wasn't even out yet! He was probably using GPT-2 or GPT-3! I also found out from a clip that he is in fact running at least some of the AI in the cloud, presumably with a provider, which makes me feel a bit better since I'm trying to do everything locally. Of course, I'm not very surprised by this - the LLMs and voice-cloning AIs that I'm using literally did not exist a handful of years ago, and only fairly recently (after Llama 2) did good-quality LLMs become possible to run on a single consumer graphics card. Although - this also means that Vedal already had an AI Vtuber prototype when Junferno's video on Vtubing predicted the rise of a fully AI Vtuber. Perhaps this is what inspired Vedal to return to his prototype, finish it, and debut it just a few weeks after Junferno's video.